AI Applications in SMB

Artificial intelligence (AI) is making waves across the business world, and businesses of all sizes can benefit from it. Thanks to emerging software tools and modern hardware platforms, even small and medium-sized businesses (SMBs) can now build internal data warehouses or knowledge bases and harness the power of automated systems.

AI is the ability of machines to mimic human cognition: systems can be programmed to solve problems through processes similar to perception, reasoning, and learning. For example, a business can improve customer service by setting up a chatbot that draws on existing resources, such as FAQs and scripted responses, to give the most appropriate answer to each user query. AI can also help businesses understand what customers really want, supporting informed decisions that lead to better marketing campaigns and customer relationships.
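As a minimal illustration of the FAQ-driven chatbot idea, the sketch below matches a user query to the closest FAQ question by word overlap (Jaccard similarity) and returns the scripted answer, falling back to a hand-off message when nothing matches well. This is a simple retrieval approach, not a machine-learning model; the FAQ entries, the `answer` function, and the 0.2 threshold are all hypothetical.

```python
import re

# Hypothetical FAQ entries; a real deployment would load these from the
# business's existing FAQ pages or scripted responses.
FAQ = {
    "What are your opening hours?": "We are open 9am to 5pm, Monday to Friday.",
    "How can I track my order?": "Use the tracking link in your confirmation email.",
    "Do you offer refunds?": "Yes, within 30 days of purchase.",
}

FALLBACK = "Sorry, I don't know that yet. A team member will follow up."


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def answer(query: str, faq: dict[str, str] = FAQ, threshold: float = 0.2) -> str:
    """Return the scripted answer whose FAQ question best matches the query.

    Similarity is the Jaccard overlap of word sets; if the best score is
    below `threshold` (a hypothetical cut-off), return the fallback message.
    """
    q = tokenize(query)
    best_score, best_answer = 0.0, FALLBACK
    for question, response in faq.items():
        t = tokenize(question)
        union = q | t
        score = len(q & t) / len(union) if union else 0.0
        if score > best_score:
            best_score, best_answer = score, response
    return best_answer if best_score >= threshold else FALLBACK


print(answer("How do I track my order?"))
print(answer("What is the weather?"))
```

The first query shares five of seven distinct words with "How can I track my order?", so it gets the tracking answer; the second overlaps too little with any entry and receives the fallback.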

An AI transformation requires an investment of time and money, but SMBs can certainly afford to take advantage of available AI technology. Modern hardware platforms, including storage tailored for SMBs, play a key role in making AI solutions affordable.

How Does Storage Work for AI?

AI, machine learning, and deep learning require storing large amounts of data for subsequent processing. Large amounts of data help train models and simulations that inform decisions and improve the validity and accuracy of the resulting insights. The storage and computing requirements of AI workloads differ greatly from those of general-purpose workloads: these applications depend on data to function and require high-performance, reliable, and scalable storage. To be effective for AI workloads, such storage must offer the following key features.

- Scalability: AI needs to process large amounts of data. As more AI data is collected, storage must scale to petabytes or more, and it must keep up with this growth without slowing down the workloads it serves.

- Rapid Access: AI runs on a complex infrastructure in which storage, applications, and data collection devices may be distributed across different environments. Efficiency is key here: fast storage ensures that data access does not become a bottleneck for AI operations.

- Latency: I/O latency matters for building and using AI models because data is read and re-read many times, so reducing I/O latency can shorten AI training by days or even months.

- Throughput: Training consumes a lot of data, often measured in terabytes per hour, and delivering random-access data at this rate can be challenging for many storage systems.

- Cost-Effectiveness: AI applications vary widely in scope and scale, and both change over time. Storage for AI should be able to start small and scale cost-effectively on demand while maintaining a similar price/performance ratio. Proper storage management and scalability also help avoid purchasing and maintaining unused resources.
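The latency and throughput points above can be made concrete with a little arithmetic. The sketch below converts a TB/hour ingest rate into GB/s and uses Little's law (completed I/Os per second = requests in flight ÷ latency) to estimate how long a fully I/O-bound training job waits on random reads. The workload figures (50 epochs, 100 million reads per epoch, 64 requests in flight) are hypothetical, and the model ignores caching and overlap with computation, so treat the result as an upper bound on I/O wait rather than a prediction.

```python
def tb_per_hour_to_gb_per_s(tb_per_hour: float) -> float:
    """Convert a data rate in TB/hour to GB/s (decimal units, 1 TB = 1000 GB)."""
    return tb_per_hour * 1000 / 3600


def io_bound_hours(total_reads: int, latency_ms: float, queue_depth: int) -> float:
    """Hours spent waiting on reads for a fully I/O-bound job.

    Little's law: with `queue_depth` requests in flight, each taking
    `latency_ms`, the system completes queue_depth / latency reads per second.
    Caching and overlap with computation are ignored.
    """
    iops = queue_depth / (latency_ms / 1000)
    return total_reads / iops / 3600


# Hypothetical workload: 50 epochs x 100 million random reads, 64 reads in flight.
reads = 50 * 100_000_000
print(f"{tb_per_hour_to_gb_per_s(10):.2f} GB/s")   # 10 TB/h -> 2.78 GB/s
print(f"{io_bound_hours(reads, 1.0, 64):.1f} h")   # 21.7 h at 1 ms
print(f"{io_bound_hours(reads, 5.0, 64):.1f} h")   # 108.5 h at 5 ms
```

Under these assumed figures, a fivefold increase in latency adds roughly 87 hours (about 3.6 days) of I/O wait, which is the kind of difference the latency point describes.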

In short, effective storage, scalability, and cost management together form the foundation of storage for AI applications.

What Are the Main Points of Storage for AI?

Storage for AI requires specific system configurations, especially in terms of optimized hardware and data transfer infrastructure. It forms part of a high-performance computing environment made up of several key components, including the following.

- High-Speed Connectivity: A high-bandwidth front end supports the heavy workloads associated with AI and machine learning. Properly configured InfiniBand, Fibre Channel, or iSCSI connectivity, which moves data from fast storage to the AI algorithms that consume it at scale, is critical to building advanced AI. Although InfiniBand offers the highest network performance, it requires specialized equipment and a much higher acquisition cost. For SMBs, the widely deployed and more affordable 25 GbE / 10 GbE iSCSI or 32 Gb / 16 Gb Fibre Channel is recommended as a starting point.

- Flash Storage: For such massive workloads, fast data retrieval must be provided at the hardware level. Flash storage placed close to powerful servers can serve as a low-latency storage medium for AI applications that require fast data access.

- Capacity Storage: To save costs, bulk data can be kept on long-term storage beyond flash. Flash storage then serves active computation, while capacity storage retains long-term data. Given the rapid growth of data, planning for up to 10 PB of future capacity is generally sufficient for SMBs.

- Performance Threshold: Rapidly feeding data from storage to AI and machine learning algorithms requires hardware capable of handling massively parallel tasks, with high random read and re-read performance and low latency. Modern SMB SAN storage that supports at least 500K random-read IOPS at under 1 ms latency offers ample headroom for a wide range of AI applications in SMBs.
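To relate the 500K IOPS threshold to the connectivity options listed above, the sketch below converts IOPS into bandwidth at an assumed 4 KiB block size, applies Little's law to find how many requests must be in flight at 1 ms, and compares the result with nominal link rates. The link figures are approximations (raw Ethernet line rates before TCP/iSCSI overhead, and the nominal 1600 / 3200 MB/s ratings of 16GFC / 32GFC); real-world throughput is lower.

```python
# Approximate per-direction data rates in GB/s (decimal units).
# Ethernet values are raw line rates before TCP/iSCSI overhead; FC values are
# the nominal 1600 / 3200 MB/s ratings of 16GFC / 32GFC. Real throughput is lower.
LINK_GB_PER_S = {
    "10GbE iSCSI": 1.25,
    "25GbE iSCSI": 3.125,
    "16Gb FC": 1.6,
    "32Gb FC": 3.2,
}


def iops_to_gb_per_s(iops: float, block_bytes: int = 4096) -> float:
    """Bandwidth implied by an IOPS figure at a fixed block size."""
    return iops * block_bytes / 1e9


def required_in_flight(iops: float, latency_ms: float) -> float:
    """Little's law: outstanding I/Os = IOPS x latency (in seconds)."""
    return iops * latency_ms / 1000


def hours_to_stream(dataset_tb: float, link: str, links: int = 1) -> float:
    """Hours to read a dataset once over `links` identical host links."""
    return dataset_tb * 1000 / (LINK_GB_PER_S[link] * links) / 3600


need = iops_to_gb_per_s(500_000)  # 500K random reads at an assumed 4 KiB block size
print(f"500K IOPS x 4 KiB = {need:.3f} GB/s")
print(f"in flight at 1 ms: {required_in_flight(500_000, 1.0):.0f}")
for name, rate in LINK_GB_PER_S.items():
    verdict = "fits" if rate >= need else "needs more than one link"
    print(f"{name}: {rate} GB/s, {verdict}")
print(f"10 TB over 2 x 25GbE: {hours_to_stream(10, '25GbE iSCSI', links=2):.2f} h")
```

At 4 KiB, 500K IOPS is about 2 GB/s, which sits within the nominal rate of a single 25 GbE or 32 Gb FC link but above a single 10 GbE or 16 Gb FC link; sustaining it at 1 ms also means keeping about 500 requests in flight.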

When it comes to AI storage, speed and capacity are the main factors to consider.
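On the capacity side, a quick way to sanity-check the 10 PB planning figure is to ask how many years of compound growth it takes to fill. The sketch below solves current × (1 + g)^n = capacity for n; the starting size of 500 TB and the 50% annual growth rate are hypothetical inputs, not figures from this article.

```python
import math


def years_until_full(current_tb: float, annual_growth: float,
                     capacity_tb: float = 10_000) -> float:
    """Years until stored data reaches `capacity_tb` under compound growth.

    Solves current_tb * (1 + annual_growth) ** n = capacity_tb for n.
    The default of 10,000 TB corresponds to the 10 PB planning figure (decimal).
    """
    if current_tb <= 0:
        raise ValueError("current_tb must be positive")
    if current_tb >= capacity_tb:
        return 0.0
    if annual_growth <= 0:
        return math.inf  # data never grows to fill the system
    return math.log(capacity_tb / current_tb) / math.log(1 + annual_growth)


# Hypothetical starting point: 500 TB today, growing 50% per year.
print(f"{years_until_full(500, 0.5):.1f} years")  # about 7.4 years
```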

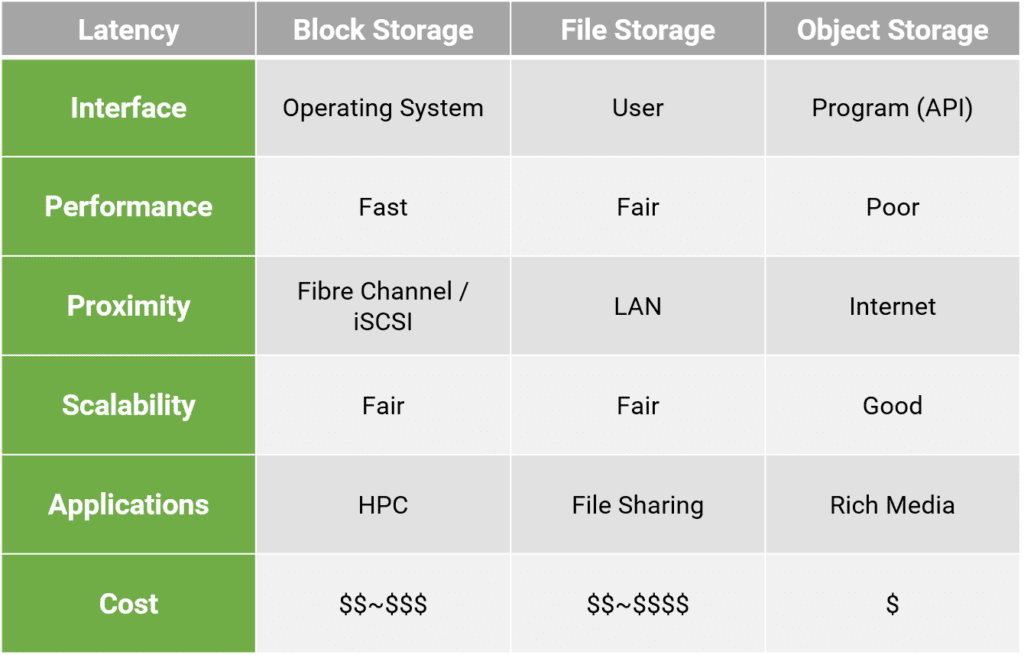

Block Storage Is the Best Fit

Each type of storage has its own advantages and disadvantages.

- Block Storage: Block-based storage provides the lowest I/O latency and the highest throughput, but its scalability is limited. Combined with a third-party parallel file system such as BeeGFS and modern SAN storage that can scale to 10 PB cost-effectively and space-efficiently, it can offer an ideal AI solution for SMBs.

- File Storage: File-based storage saves each piece of data as a file within a hierarchy of folders, organized alongside other data. Historically, however, file-based products have not delivered the highest levels of performance.

- Object Storage: Object-based storage stores each data item as an object, together with its associated metadata, in a storage pool. It provides the highest scalability but often not the highest throughput or the lowest latency.

Given these trade-offs, the goal for AI in SMBs is to achieve maximum speed at the lowest cost, which makes all-flash arrays (AFAs) the ideal storage solution. Connecting multiple servers directly to the AFA over commonly used protocols such as 25 GbE / 10 GbE iSCSI or 32 Gb / 16 Gb Fibre Channel reduces costs further, because no costly and complicated networking equipment needs to be deployed. Open-source parallel file systems such as BeeGFS also make it much easier for SMBs to adopt AI.
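To show why a parallel file system layered over block storage raises throughput, the sketch below models, in simplified form, how a file can be striped across several storage targets: its contents are split into fixed-size chunks placed round-robin, so chunk k lives on target k mod N. This illustrates the general striping idea rather than BeeGFS's actual placement code; the 1 MiB chunk size and four targets are illustrative assumptions.

```python
def bytes_per_target(offset: int, length: int,
                     chunk_size: int, num_targets: int) -> dict[int, int]:
    """Bytes of the read [offset, offset + length) served by each storage target,
    assuming simple round-robin striping: chunk k lives on target k % num_targets."""
    served: dict[int, int] = {}
    pos, end = offset, offset + length
    while pos < end:
        chunk = pos // chunk_size
        chunk_end = (chunk + 1) * chunk_size
        take = min(end, chunk_end) - pos  # bytes of this read inside the chunk
        target = chunk % num_targets
        served[target] = served.get(target, 0) + take
        pos += take
    return served


MiB = 1024 * 1024
# Illustrative layout: 1 MiB chunks striped over 4 targets (e.g. 4 SAN volumes).
print(bytes_per_target(0, 8 * MiB, chunk_size=MiB, num_targets=4))
```

Each of the four targets serves an equal 2 MiB share of the 8 MiB read, so a large sequential read can draw on the bandwidth of all volumes at once.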

Building Block Examples

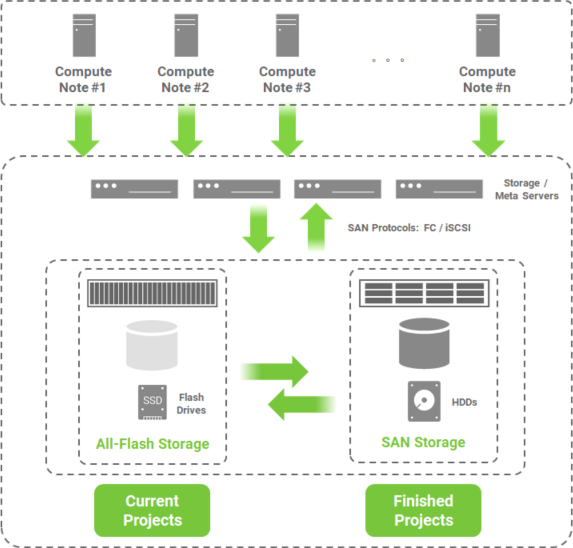

The diagram above shows an example building block for SMBs. The active dataset for the current project is kept on the AFA for training; afterward, the results and datasets are moved to lower-cost capacity storage for long-term preservation.
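The move from AFA to capacity storage could be automated with a small script. The sketch below assumes both tiers are mounted as ordinary file systems on one host, that each project lives in its own directory on the flash tier, and that the training pipeline drops a `DONE` marker file when a project finishes; the marker convention and the function name are hypothetical.

```python
import shutil
from pathlib import Path


def archive_completed_projects(flash_root: Path, capacity_root: Path,
                               marker: str = "DONE") -> list[str]:
    """Move each finished project (a directory on the flash tier containing
    `marker`) to the capacity tier and return the names of the moved projects.
    The marker-file convention is a hypothetical example."""
    capacity_root.mkdir(parents=True, exist_ok=True)
    moved = []
    # Materialize the listing first so moving directories doesn't disturb iteration.
    projects = sorted(p for p in flash_root.iterdir() if p.is_dir())
    for project in projects:
        if not (project / marker).exists():
            continue  # still active: keep it on flash for training
        dest = capacity_root / project.name
        if dest.exists():
            raise FileExistsError(f"{dest} already exists on the capacity tier")
        # Across file systems, shutil.move copies the tree, then deletes the source.
        shutil.move(str(project), str(dest))
        moved.append(project.name)
    return moved
```

For example, `archive_completed_projects(Path("/mnt/afa/projects"), Path("/mnt/capacity/archive"))` could run from a nightly scheduled job; both mount points are hypothetical.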

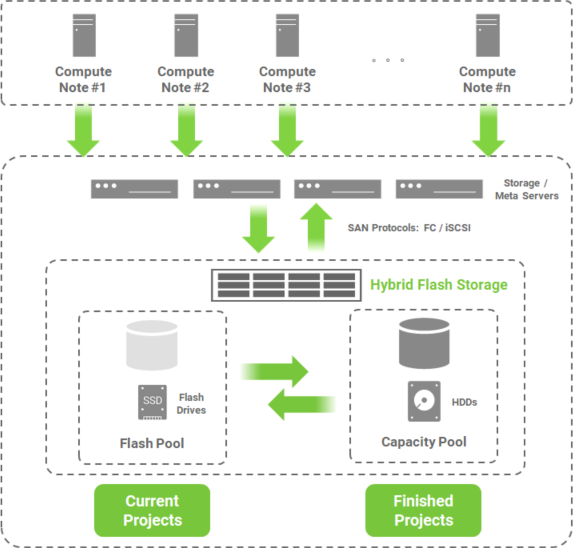

The second example focuses on cost optimization. Instead of a separate AFA and capacity storage, a hybrid array combines high-performance SSDs with HDDs that offer the best price/capacity ratio, making it a cost-effective alternative for AI in SMBs. The current dataset is processed in the flash pool and moved to the capacity pool when the project is complete. Performance is also acceptable if the platform and disk drives are well matched.
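The cost advantage of a hybrid pool comes from averaging a small amount of expensive flash with a large amount of cheaper HDD capacity. The sketch below computes the blended cost per TB; the prices are placeholders in arbitrary units (flash assumed to cost five times as much per TB as HDD), not market data, and RAID overhead and controller costs are ignored.

```python
def blended_cost_per_tb(flash_tb: float, hdd_tb: float,
                        flash_price_per_tb: float, hdd_price_per_tb: float) -> float:
    """Average cost per TB of a hybrid pool that mixes SSD and HDD capacity."""
    total_tb = flash_tb + hdd_tb
    if total_tb <= 0:
        raise ValueError("pool must have positive capacity")
    return (flash_tb * flash_price_per_tb + hdd_tb * hdd_price_per_tb) / total_tb


# Placeholder prices in arbitrary units, not market data. The flash pool is
# assumed to be sized to hold the active dataset (50 TB of a 500 TB pool).
hybrid = blended_cost_per_tb(flash_tb=50, hdd_tb=450,
                             flash_price_per_tb=100, hdd_price_per_tb=20)
all_flash = blended_cost_per_tb(500, 0, 100, 20)
print(f"hybrid: {hybrid:.0f} units/TB, all-flash: {all_flash:.0f} units/TB")
```

With these assumed inputs the hybrid pool costs 28 units per TB versus 100 for all flash; the real ratio depends on actual drive prices and how large the active dataset is.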

Conclusion

Applications that use or train AI cannot rely on traditional storage to function properly. They require high-performance, high-availability storage from which they can continuously ingest large amounts of data to facilitate learning and growth. Based on the analysis and recommendations above, block storage is well suited to AI in SMBs, whether as an AFA plus capacity SAN storage or as hybrid block storage combining the two. Together with BeeGFS, a popular parallel file system developed and optimized for high-performance computing, either option forms a competitive AI training environment.